Make Cloud Costs Everyone’s Responsibility

Transform your engineering teams from AWS & Azure cost firefighters into true cost owners. CloudForecast helps DevOps, SRE, and Infrastructure leaders avoid bill shock and share cost accountability across their teams.

No credit card required for a free trial.

Trusted by companies that have eliminated cloud cost surprises and saved $1M+ annually by empowering their engineering teams to own their costs.

Why Teams Choose CloudForecast

Clarity and accountability in cloud costs, built for engineering teams

Turn AWS & Azure Cost Surprises Into Predictable Wins

Misconfigurations and forgotten resources happen.

CloudForecast makes them visible early—and helps you become the leader who solved the cost problem.

Scale Your Engineering Teams Without Scaling Your Headaches

As your company grows from 50 to 500+ employees, your cloud costs become impossible to manage centrally.

Empower each team to own their costs with CloudForecast Daily Cost Group Reports.

Transform from Cost Firefighter to Strategic Leader

Don’t carry the weight of cloud costs alone.

Empower your engineering teams to take ownership, and become the leader who solved the cost problem.

Engineering Leaders Who Solved the Cloud Cost Problem

See why Engineering, DevOps, SRE, and Infra teams at top companies trust CloudForecast as their AWS & Azure cost management tool.

How BigBasket Achieved 99% Tagging Coverage with CloudForecast

BigBasket achieved 99% AWS tagging compliance with CloudForecast, transforming cost control from reactive to proactive. By automating tagging, monitoring, and cost reporting for 20+ teams, they gained complete visibility, saved time, and created a culture of accountability in cost management.

How GroundTruth Achieved 32% Annualized Savings With CloudForecast

CloudForecast has helped the GroundTruth engineering team focus on the right areas and prioritize appropriately. As a result, they exceeded their cost-optimization goals for 2023, eclipsing 32% in annualized savings.

Say Goodbye to Unexpected AWS & Azure Costs

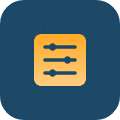

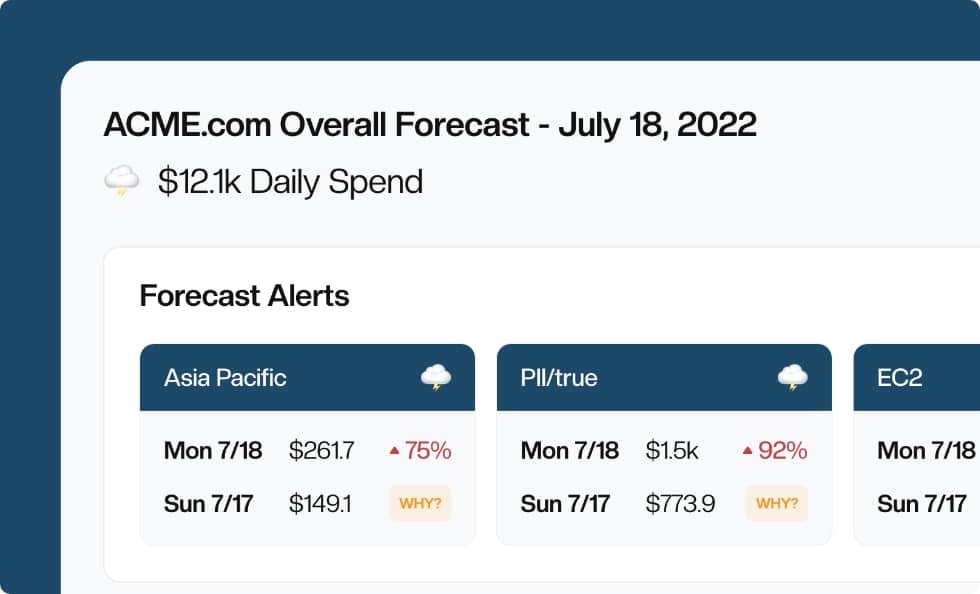

24/7 Bill Shock Prevention

Cloud cost management shouldn’t be a guessing game. Complex dashboards and manual reporting slow teams down, making it hard to get the right data to the right stakeholders.

CloudForecast makes AWS & Azure cost visibility easy with proactive Cost Group Reports via email, Slack or Teams, ensuring each team gets the data they need in the way they need it—so they can take ownership of their cloud costs without the extra work.

- Daily Cloud Cost Group Reports
- Proactive Reports via Email, Slack and Teams
- Monthly AWS Financial Reports
- EKS and ECS Cost Visibility

Turn Engineers into Cost Optimizers

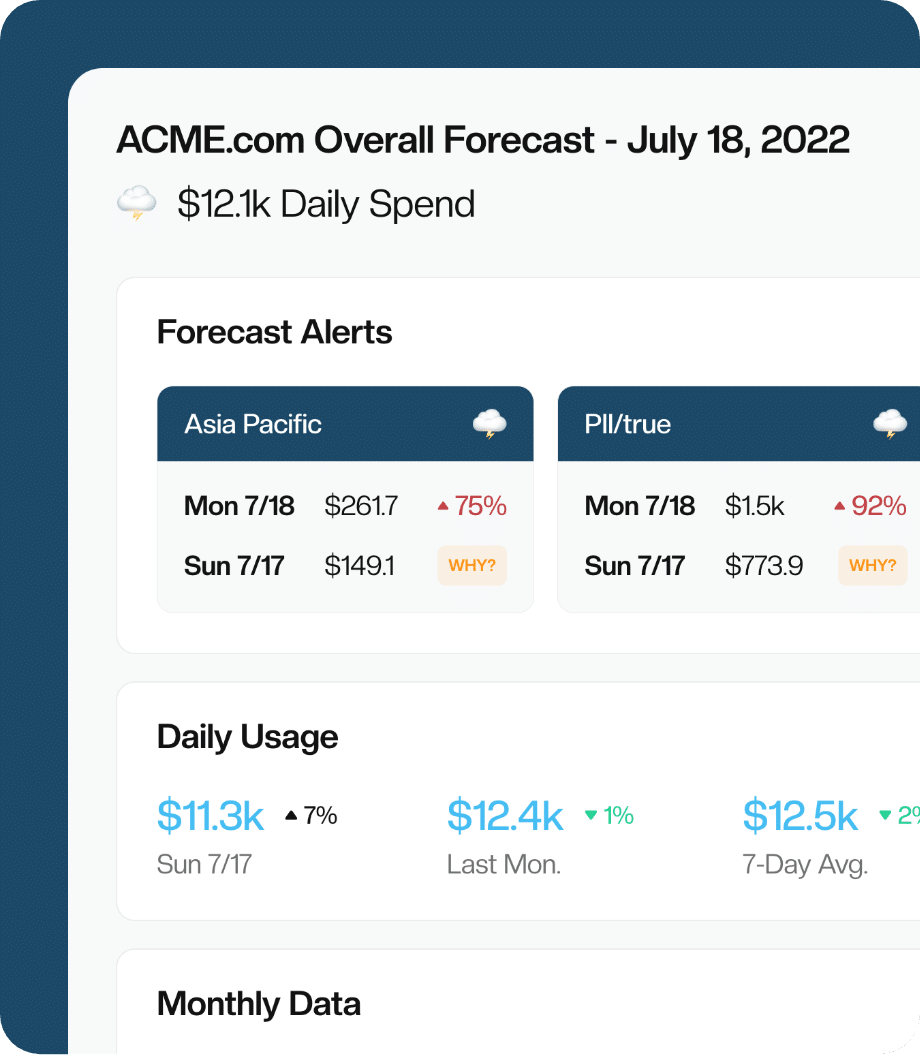

Digging through AWS & Azure cost data shouldn’t be hours of detective work. Most tools flood teams with irrelevant details, making it hard to pinpoint the root cause of cost spikes and inefficiencies.

CloudForecast cuts through the noise, surfacing only the insights that matter—so your team can take quick, informed action.

- ZeroWaste Cost Optimization Report
- Cloud Cost Detective Tool
- "Why?" Cost Overrun Summaries

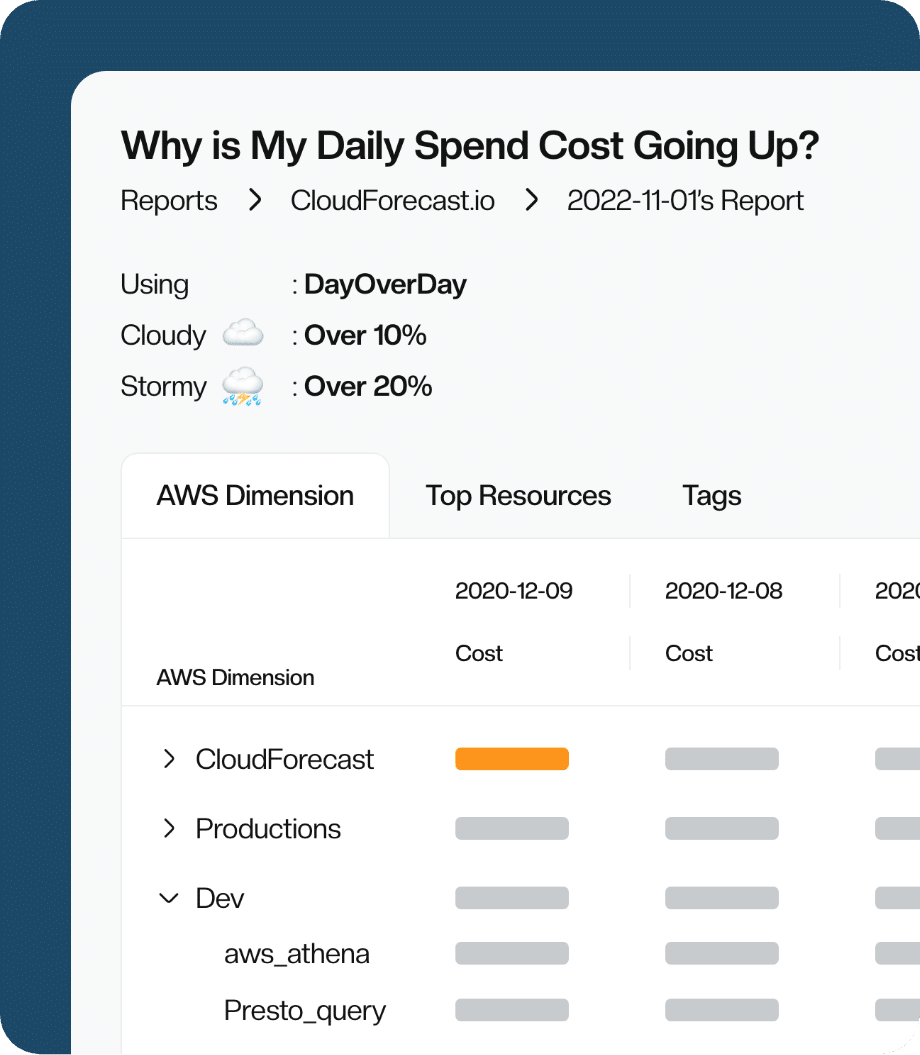

AWS & Azure Cost Allocation

Scale from 3 Teams to 25+ Teams

Without proper tagging, teams struggle to track spending, allocate costs, and ensure financial accountability.

CloudForecast helps teams clean up tagging and allocate AWS & Azure costs effortlessly, so you can quickly identify untagged resources and ensure every dollar is accounted for.

- Tagging Compliance Report
- Slice/Dice costs by sub-accounts, tags or cost categories
- Custom Report Buildout for Finance Teams

Engineering, DevOps, SRE and Infra Teams Love Us

We earn our customers' support and trust by being relentlessly helpful, as evidenced by the testimonials from our users.

4.9

Simplify AWS & Azure Cost Management

We would love to learn more about the problems you are facing around AWS and Azure costs. Connect with us directly, and we’ll schedule a time to chat!

Skeptical Developers & Engineering Managers Have Asked…

Still can’t find what you’re looking for?