AWS SageMaker Pricing Guide – Cost Breakdown & Optimization Tips

There’s no way to sugarcoat it: AWS SageMaker pricing is confusing. If you’ve ever stared at the AWS SageMaker pricing page and thought, “So how much is this actually going to cost me?”, you’re not alone. With so many different features, instance types, and pricing models, forecasting your SageMaker bill can feel like having to solve a machine learning problem before you even get to train a model.

But don’t worry! That’s why this guide exists.

Whether you’re a startup looking to train your first model or a large enterprise running production ML workflows, this article will walk you through everything you need to know about SageMaker pricing. After walking through some real-world pricing examples, we’ll also cover actionable tips to cut costs.

AWS SageMaker Pricing Model Overview

AWS SageMaker mainly uses a pay-as-you-go model. But if it were truly that simple, you wouldn’t be reading this article. Your bill is made up of several cost components, including compute instances, storage, data transfer, and API calls. Some services within SageMaker charge based on instance hours, while others bill for requests, storage, or data processed.

We’ll break down all those nitty-gritty details soon. But first, there’s an important update to cover: AWS recently introduced Amazon SageMaker Unified Studio, which reshapes how SageMaker (and its pricing) works.

SageMaker Unified Studio vs. SageMaker AI

In December 2024, AWS launched SageMaker Unified Studio, an all-in-one AI and data analytics platform. It combines ML development with AWS analytics tools like Amazon EMR, AWS Glue, and Amazon Redshift, all in a single interface. This means that the cost of using SageMaker Unified Studio also depends on your usage of those included services.

Meanwhile, the original SageMaker service has been rebranded as SageMaker AI, focusing specifically on ML tasks like training and deploying models. If you’re just here for machine learning, you’ll primarily use SageMaker AI. For a broader data pipeline, Unified Studio offers a fully integrated experience.

Why does this matter? Because your SageMaker costs now depend on which “version” of SageMaker you’re using.

To keep things simple, we’ll focus primarily on SageMaker AI costs for the rest of this article, since that’s where most of the complexity lies. Just remember that using Unified Studio incurs additional costs for its extra services.

SageMaker AI Free Tier: What’s Included?

Let’s start with some good news! Like many AWS services, SageMaker AI offers a Free Tier to help you get started at no cost for the first two months. But as with most Free Tier offerings, the details matter: usage limits vary depending on which features you use.

Here’s a quick breakdown of what’s included each month, for the first two months:

| Service | Free Tier Allowance | Instance Type |
|---|---|---|
| Studio Notebooks | 250 hours | ml.t3.medium, ml.t2.medium |
| RStudio on SageMaker | 250 hours (RSession) + 1 free instance (RStudioServerPro) | ml.t3.medium |
| Data Wrangler | 25 hours | ml.m5.4xlarge |
| Feature Store | 10M write units, 10M read units, 25GB storage | N/A |
| Training | 50 hours | ml.m4.xlarge, ml.m5.xlarge |
| TensorBoard | 300 hours | ml.r5.large |
| Real-Time Inference | 125 hours | ml.m4.xlarge, ml.m5.xlarge |
| Serverless Inference | 150,000 seconds of on-demand inference duration | N/A |
| Canvas | 160 hours of session time | N/A |
| HyperPod | 50 hours | ml.m5.xlarge |

If you’re just getting started with SageMaker, the Free Tier gives you enough room for experimentation. But once those two months are up, or if you exceed Free Tier usage, it’s important to understand what you’ll be paying for next.

SageMaker On-Demand Pricing

On-demand pricing is where things start to get a little complicated. In the on-demand pricing section of the SageMaker AI pricing page, you’ll notice that costs vary greatly by service. Refer to the tables there for exact rates; here’s a summary of how you’re billed based on the type of service you’re using:

| Service Type | On-Demand Pricing Model | Cost Factor | Example SageMaker Service |
|---|---|---|---|
| Training and Notebooks | Hourly rate for compute time, billed per second | ML instance type | Training, Notebook Instances, RStudio |
| Inference | Hourly rate for running instances, billed per second | ML instance type | Real-Time Inference, Asynchronous Inference |
| Data Storage | Per GB stored per month | Storage class (e.g., S3 Standard, S3 Glacier) | Feature Store |
| Data Transfer | Per GB transferred out of AWS | Data transferred out | Any service transferring data externally |

We’ll walk through specific pricing examples soon. For now, understand that compute instances are priced at an hourly rate, while storage and outbound data transfer are charged per GB.
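To make those billing units concrete, here’s a minimal Python sketch of the two building blocks. It assumes instance time is prorated per second from the hourly rate (check the pricing page for how your specific service handles partial hours), and the function names are our own, not part of any AWS SDK.

```python
SECONDS_PER_HOUR = 3600


def instance_cost(hourly_rate: float, seconds_used: float, instance_count: int = 1) -> float:
    """Compute cost for instances, prorating the hourly rate per second (assumption)."""
    return hourly_rate * (seconds_used / SECONDS_PER_HOUR) * instance_count


def per_gb_cost(gigabytes: float, rate_per_gb: float) -> float:
    """Storage (per GB-month) and data transfer out (per GB) both scale linearly with volume."""
    return gigabytes * rate_per_gb


# A 90-minute training run on one ml.m5.xlarge at $0.23/hour.
print(f"${instance_cost(0.23, 90 * 60):.3f}")  # $0.345
# 100 GB kept in S3 Standard for a month at $0.023/GB-month.
print(f"${per_gb_cost(100, 0.023):.2f}")  # $2.30
```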

SageMaker Request-Based Pricing

Some SageMaker AI services are billed based on the number of API requests, data processed, or inference invocations. This means that your costs scale with actual usage, rather than just compute time or storage.

The main features you’ll use for most AI tasks here are Feature Store and Data Wrangler. Again, refer to the SageMaker AI pricing page for exact numbers, but here’s a high-level breakdown of how their pricing works:

| SageMaker Service | Pricing Model | Cost Factor |
|---|---|---|
| Feature Store | Pay per million reads/writes | Number of read and write requests |
| Data Wrangler | Pay per instance-hour (e.g., ml.m5.4xlarge) | Instance type and processing time |

Note that some services show up under more than one pricing model. Feature Store combines per-GB storage (on-demand) with per-request reads and writes (request-based). Data Wrangler, despite often being grouped with request-based features, is billed by instance-hour like the compute services above, which is how it is priced in the Free Tier and in Example 2 below.

AWS SageMaker Real-World Pricing Examples

Now that you understand the foundation of how SageMaker services are priced, let’s break down real-world SageMaker pricing scenarios so you can estimate what your bill might look like.

Example 1: Startup Training a Simple Model

Let’s say you’re a small AI startup building a product image classifier for an e-commerce platform. You have a dataset of 1 million images (at 100KB per image, this is approximately 100 GB). You want to train and deploy a CNN-based image classifier on this dataset, and you’re wondering whether SageMaker can get the job done within budget. Here are your parameters and assumptions for this job:

- Training instance: ml.m5.xlarge ($0.23/hour). This is a popular choice that provides excellent value for small to medium jobs.

- Training time: 150 hours. Training time can vary a lot depending on complexity, but 150 hours is a reasonable baseline.

- Dataset Size: 100GB.

- Real-time inference: After the model is trained, we expect image classification requests to arrive on demand throughout the day, so the endpoint needs to stay up 24/7. We’ll use the cheaper ml.m5.large ($0.115/hour) for real-time inference.

- Additional costs: For simplicity, we’ll assume no other costs such as data transfers out.

As we saw earlier, model training and real-time inference are charged for instance time at an hourly rate. In addition, we’ll incur S3 storage costs for the dataset. Let’s calculate all these costs in the table below:

| Service Type | Pricing Model | Usage | Estimated Cost |
|---|---|---|---|
| Model Training | $0.23/hour (ml.m5.xlarge) | 150 hours | $34.50 |
| Real-Time Inference (Compute) | $0.115/hour (ml.m5.large) | 24/7 uptime for one month (720 hours) | $82.80 |
| Storage (S3) | $0.023/GB/month | 100GB | $2.30 |
| Total Monthly Cost | | | $119.60 |
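If you want to rerun this estimate with your own numbers, the table boils down to a few multiplications. Here’s a small Python sketch that reproduces it; the rates are the ones used in this example (they vary by region and change over time), and the 720-hour month matches the table’s 24/7 assumption.

```python
# Rates used in Example 1 (verify against the SageMaker AI and S3 pricing pages).
TRAINING_RATE = 0.23     # ml.m5.xlarge training, $/hour
INFERENCE_RATE = 0.115   # ml.m5.large real-time inference, $/hour
S3_RATE = 0.023          # S3 Standard, $/GB-month
HOURS_PER_MONTH = 720    # 24 hours x 30 days


def startup_estimate(training_hours: float = 150, dataset_gb: float = 100) -> dict:
    """Itemized first-month cost for the image-classifier scenario."""
    costs = {
        "Model Training": TRAINING_RATE * training_hours,
        "Real-Time Inference": INFERENCE_RATE * HOURS_PER_MONTH,
        "Storage (S3)": S3_RATE * dataset_gb,
    }
    costs["Total"] = sum(costs.values())  # summed before "Total" is added
    return costs


for item, cost in startup_estimate().items():
    print(f"{item:<20} ${cost:>8,.2f}")
```

Doubling training time to 300 hours (`startup_estimate(training_hours=300)`) only adds another $34.50, for a total of $154.10, because the always-on inference endpoint is the larger line item.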

That wasn’t so bad! All things considered, this is a decent price to pay for this training and inference task.

Example 2: Enterprise Running a Production ML Model

In this next example, you’re a large company using SageMaker AI to train and deploy a deep learning model that processes customer behavior data. Here are the parameters and assumptions for this more complex job:

- Training instance: ml.p4d.24xlarge ($37.688/hour). A powerful machine for production-scale training jobs.

- Training time: 500 hours. Again, training time can vary a lot, but we’ll assume 500 hours (about 21 days) for the main training plus hyperparameter tuning over the course of a month.

- Data Wrangler: We’ll assume 50 hours of data processing via Data Wrangler before training.

- Feature Store: We’ll assume 2TB of feature storage, plus 1B reads and 1B writes during training.

- Dataset Size: 20TB. That’s a good chunk of customer data!

- Real-time inference: After the model is trained, we’ll use the cheaper ml.g5.4xlarge ($2.03/hour) for real-time inference.

- Additional costs: For simplicity, we’ll assume no other costs such as data transfers out.

Here’s a calculation of all these costs:

| Service Type | Pricing Model | Usage | Estimated Cost |
|---|---|---|---|
| Model Training | $37.688/hour (ml.p4d.24xlarge) | 500 hours | $18,844.00 |
| Data Wrangler | $0.922/hour (ml.m5.4xlarge) | 50 hours | $46.10 |
| Feature Store | $0.45/GB/month (storage); $1.25/million writes; $0.25/million reads | 2TB storage; 1B writes; 1B reads | $900 (storage); $1,250 (writes); $250 (reads) |
| Real-Time Inference (Compute) | $2.03/hour (ml.g5.4xlarge) | 24/7 uptime for one month (720 hours) | $1,461.60 |
| Storage (S3) | $0.023/GB/month | 20TB | $460 |
| Total Monthly Cost | | | $23,211.70 |
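The same approach scales to the enterprise scenario. This Python sketch encodes each row of the table as a (rate, quantity) pair; as in the table, it treats 1 TB as 1,000 GB and 1B requests as 1,000 million, and the rates are the example’s figures rather than live prices.

```python
# (line item, rate, quantity) for each row of Example 2's table.
LINE_ITEMS = [
    ("Model Training (ml.p4d.24xlarge)", 37.688, 500),    # $/hour x hours
    ("Data Wrangler (ml.m5.4xlarge)", 0.922, 50),         # $/hour x hours
    ("Feature Store storage", 0.45, 2_000),               # $/GB-month x GB (2 TB)
    ("Feature Store writes", 1.25, 1_000),                # $/million writes x millions (1B)
    ("Feature Store reads", 0.25, 1_000),                 # $/million reads x millions (1B)
    ("Real-Time Inference (ml.g5.4xlarge)", 2.03, 720),   # $/hour x hours (24/7)
    ("Storage (S3)", 0.023, 20_000),                      # $/GB-month x GB (20 TB)
]


def itemized_bill(items):
    """Return [(name, cost), ...] and the grand total."""
    lines = [(name, rate * quantity) for name, rate, quantity in items]
    total = sum(cost for _, cost in lines)
    return lines, total


lines, total = itemized_bill(LINE_ITEMS)
for name, cost in lines:
    print(f"{name:<38} ${cost:>10,.2f}")
print(f"{'Total Monthly Cost':<38} ${total:>10,.2f}")

training_share = lines[0][1] / total
print(f"Training share of the bill: {training_share:.0%}")
```

Running it shows training at roughly 81% of the total, which is why the cost strategies below focus so heavily on training.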

This is definitely a much heftier bill, and training is by far the biggest cost, accounting for about 81% of it ($18,844 of $23,211.70). Deep learning models trained on GPUs can get really expensive!

AWS SageMaker Cost Optimization Strategies

Now, let’s talk about keeping costs under control. There are plenty of ways to optimize your SageMaker spending without sacrificing too much performance.

Instance Rightsizing

The best way to save money is to choose the most appropriate instance for your use case. For example, if you initially pick an ml.p3.2xlarge ($3.825/hour) for training but see that GPU utilization is consistently below 30%, you might decide to switch to the ml.g5.2xlarge ($1.515/hour) instead. That cuts your hourly cost by about 60%.
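A quick way to sanity-check a rightsizing move is to compare total job cost, not just hourly rates, since a cheaper instance may take longer to finish the same job. Here’s a minimal Python sketch; the 1.5x slowdown in the second call is a made-up figure purely for illustration.

```python
def percent_savings(old_rate: float, new_rate: float,
                    old_hours: float = 1.0, new_hours: float = 1.0) -> float:
    """Percentage reduction in job cost when moving from one instance type to another."""
    old_cost = old_rate * old_hours
    new_cost = new_rate * new_hours
    return (old_cost - new_cost) / old_cost * 100


# ml.p3.2xlarge ($3.825/hour) -> ml.g5.2xlarge ($1.515/hour), same job duration.
print(f"{percent_savings(3.825, 1.515):.1f}%")  # 60.4%

# Hypothetical: the job takes 1.5x as long on the new instance.
print(f"{percent_savings(3.825, 1.515, old_hours=10, new_hours=15):.1f}%")  # 40.6%
```

Even with a slowdown, the switch can still pay off. It only stops paying off once the new instance takes about 2.5x as long as the old one (3.825 / 1.515 ≈ 2.52).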

But with over 100 instance types to choose from, this is easier said than done, right? For ML tasks specifically, you could consider the following questions:

- Do you need a GPU? Many traditional ML models run fine on CPUs. GPU instances are significantly more expensive, so reserve them for training deep learning models that actually need the parallel processing power.

- How long will your training job take? You might consider choosing a smaller instance to conduct some quick tests to help you estimate how long training would take on a larger instance. Do this before committing to an expensive machine.

Additionally, take advantage of AWS’s built-in monitoring solutions. For example, you can use Amazon CloudWatch to track key utilization metrics, such as CPU and GPU utilization, for your instances. Set thresholds and alarms so CloudWatch notifies you when an instance is consistently underused, a sign that a smaller instance may be enough.
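As a sketch of what such an alarm might look like for a real-time endpoint, the Python below builds the parameters for CloudWatch’s `PutMetricAlarm` call (exposed in boto3 as `put_metric_alarm`) on the endpoint’s `GPUUtilization` metric in the `/aws/sagemaker/Endpoints` namespace. The endpoint name, variant name, SNS topic ARN, and the 30% threshold are placeholders. Note that SageMaker reports endpoint GPU utilization per instance as a percentage multiplied by the number of GPUs, so scale the threshold for multi-GPU instances.

```python
def low_gpu_alarm_params(endpoint_name: str, variant_name: str,
                         sns_topic_arn: str, threshold_pct: float = 30.0) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm: fire when average GPU
    utilization stays below threshold_pct for 24 consecutive hours."""
    return {
        "AlarmName": f"{endpoint_name}-{variant_name}-low-gpu-utilization",
        "AlarmDescription": "GPU utilization has stayed low; consider a smaller instance type.",
        "Namespace": "/aws/sagemaker/Endpoints",
        "MetricName": "GPUUtilization",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 3600,               # one-hour averages...
        "EvaluationPeriods": 24,      # ...below the threshold 24 periods in a row (one day)
        "Threshold": threshold_pct,
        "ComparisonOperator": "LessThanThreshold",
        "TreatMissingData": "notBreaching",  # don't alarm on gaps in the metric
        "AlarmActions": [sns_topic_arn],
    }


def create_alarm(params: dict) -> None:
    """Create or update the alarm; needs boto3 and AWS credentials allowed to call PutMetricAlarm."""
    import boto3  # imported here so the parameter builder works without boto3 installed
    boto3.client("cloudwatch").put_metric_alarm(**params)


# Placeholder names: replace with your own endpoint, variant, and SNS topic.
params = low_gpu_alarm_params(
    "my-image-classifier",
    "AllTraffic",
    "arn:aws:sns:us-east-1:123456789012:ml-cost-alerts",
)
# create_alarm(params)  # uncomment to actually create the alarm
```

Keeping the parameter builder separate from the API call makes it easy to review (or unit-test) the alarm definition before anything is created in your account.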

Utilizing SageMaker Savings Plans

If you have predictable workloads and can commit to a consistent amount of SageMaker usage for a 1- or 3-year term, a SageMaker Savings Plan can significantly lower your costs compared to on-demand pricing. AWS advertises savings of up to 64%, with the deepest discounts going to 3-year terms, so a 1-year plan will save less. In the enterprise example from earlier, where training and inference make up over $20,000 of the monthly bill, even a partial discount adds up quickly.

Savings Plans are one of the main ways to save on training, which is often the most expensive part of using SageMaker. If you have many models to train in the near future, the math tends to work out in your favor.
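To see why predictability matters, here’s a simplified Python model of how a Savings Plan bills: you pay your hourly commitment no matter what, usage is charged at discounted rates until the commitment is used up, and anything beyond that is billed on-demand. The 30% discount is a placeholder, not an AWS rate (actual discounts depend on instance family, region, term, and payment option), and both workload shapes are hypothetical; the $2.03/hour rate is the ml.g5.4xlarge inference rate from Example 2.

```python
def hourly_cost_with_plan(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Cost for one hour under a simplified Savings Plan model.

    on_demand_usage: what this hour's eligible usage would cost at on-demand rates ($)
    commitment:      hourly commitment in $ (billed even if unused)
    discount:        fractional discount vs. on-demand (placeholder, e.g. 0.30)
    """
    discounted = on_demand_usage * (1 - discount)
    if discounted <= commitment:
        return commitment  # usage fits inside the commitment; the unused part is still billed
    # Usage beyond what the commitment covers falls back to on-demand rates.
    covered_on_demand = commitment / (1 - discount)
    return commitment + (on_demand_usage - covered_on_demand)


DISCOUNT = 0.30                     # placeholder discount, not an AWS figure
RATE = 2.03                         # ml.g5.4xlarge inference, $/hour (Example 2)
COMMITMENT = RATE * (1 - DISCOUNT)  # commit to exactly one instance's discounted cost

steady = [RATE] * 720                                        # endpoint up 24/7
bursty = [RATE if h % 24 < 8 else 0.0 for h in range(720)]   # 8 hours/day only

for name, usage in (("steady", steady), ("bursty", bursty)):
    on_demand = sum(usage)
    with_plan = sum(hourly_cost_with_plan(u, COMMITMENT, DISCOUNT) for u in usage)
    print(f"{name}: on-demand ${on_demand:,.2f} vs. Savings Plan ${with_plan:,.2f}")
```

With the steady workload, the plan captures the full discount ($1,023.12 instead of $1,461.60). With the bursty one, a commitment sized for peak usage costs more than simply paying on-demand ($1,023.12 instead of $487.20), which is why a plan should be sized to your baseline usage rather than your peak.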

Batch Transform vs. Real-Time Inference

Many developers and teams default to real-time inference, but Batch Transform can be cheaper if you don’t need instant predictions. The difference comes down to usage: while instance rates are mostly the same across the board, a real-time endpoint stays online (and billed) 24/7, whereas with Batch Transform you only pay while instances are actually processing your inference job.

Depending on how often you need predictions, you can cut your inference costs by 80% or even more!
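Here’s the arithmetic behind that kind of saving as a small Python sketch. It reuses Example 1’s ml.m5.large rate for both options, on the simplifying assumption that Batch Transform and real-time instance rates are identical, and the 4-hour nightly batch window is hypothetical.

```python
def monthly_inference_cost(hourly_rate: float, hours_per_day: float,
                           days: int = 30, instances: int = 1) -> float:
    """Instance cost for a month, given how many hours per day the instances run."""
    return hourly_rate * hours_per_day * days * instances


RATE = 0.115  # ml.m5.large, $/hour (Example 1); assumed equal for both options

real_time = monthly_inference_cost(RATE, hours_per_day=24)  # endpoint always on
batch = monthly_inference_cost(RATE, hours_per_day=4)       # hypothetical nightly batch job

print(f"Real-time: ${real_time:.2f}/month")       # $82.80
print(f"Batch:     ${batch:.2f}/month")           # $13.80
print(f"Savings:   {1 - batch / real_time:.0%}")  # 83%
```

Shorter batch windows push the savings higher: at 1 hour per day, the same calculation gives about 96%.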

Conclusion

AWS SageMaker pricing can seem daunting at first. But once you understand a few key concepts about how the pricing models work, it’s not too difficult to navigate. Moreover, you can keep costs under control with the right strategies, including rightsizing instances, considering Savings Plans, and optimizing inference workloads.

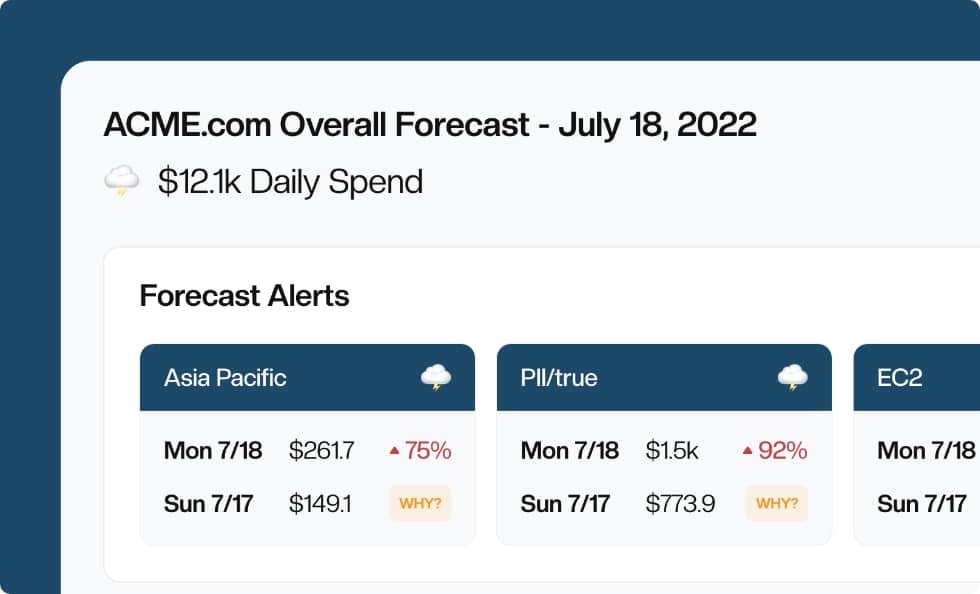

However, keeping track of all these cost factors manually can be a whole other beast. That’s where CloudForecast comes in. CloudForecast helps you track, forecast, and optimize your SageMaker costs so you can stay on top of your AWS spending without all the headaches. Try it out today and take the guesswork out of your AWS cost management.
