Using AWS Athena To Convert A CSV File To Parquet

Running into issues with using Athena to convert a CSV file to Parquet or have a random AWS question? We would love to help if we can, for free. Check us why do we it here, schedule a time with us via our calendly link or drop us an email at [email protected].

Amazon Athena is a powerful product that allows anyone with SQL skills to analyze large-scale datasets in seconds without the need to set up complex processes to extract, transform, and load the data (ETL).

I wrote about AWS Athena in my last two blog posts: Watch Out For Unexpected S3 Cost When Using AWS Athena and Using Parquet On Amazon Athena For AWS Cost Optimization, and I wanted to follow up on a not so common feature of Athena: The ability to transform a CSV file to Apache Parquet for really cheap!

Transforming a CSV file to Parquet is not a new challenge and it’s well documented by here and here. All these options are great and can be used in production, but they all require the use of things like AWS EMR, Spark or AWS Glue. If you want to check out Parquet or have a one-off task, using Amazon Athena can speed up the process.

Thanks to the Create Table As feature, it’s a single query to transform an existing table to a table backed by Parquet.

To demonstrate this feature, I’ll use an Athena table querying an S3 bucket with ~666MBs of raw CSV files (see Using Parquet on Athena to Save Money on AWS on how to create the table (and learn the benefit of using Parquet)).

Here is the query to convert the raw CSV data to Parquet:

1 2 3 4 5 6 |

CREATE TABLE flights.athena_created_parquet_snappy_data

WITH (

format = 'PARQUET',

parquet_compression = 'SNAPPY',

external_location = 's3://{INSERT_BUCKET}/athena-export-to-parquet'

) AS SELECT * FROM raw_data

|

Since AWS Athena only charges for data scanned (in this case 666MBs), I will only be charged $0.0031 for this example.

The data is now available in my new table flights.athena_created_parquet_snappy_data:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 |

CREATE EXTERNAL TABLE `athena_created_parquet_snappy_data`( `year` smallint, `month` smallint, `day_of_month` smallint, `flight_date` string, `op_unique_carrier` string, `flight_num` string, `origin` string, `destination` string, `crs_dep_time` string, `dep_time` string, `dep_delay` double, `taxi_out` double, `wheels_off` string, `arr_delay` double, `cancelled` double, `cancellation_code` string, `diverted` double, `air_time` double, `carrier_delay` double, `weather_delay` double, `nas_delay` double, `security_delay` double, `late_aircraft_delay` double) ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe' STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat' LOCATION 's3://cf-flight-data-2018/athena-export-to-parquet' TBLPROPERTIES ( 'has_encrypted_data'='false', 'parquet.compression'='SNAPPY') |

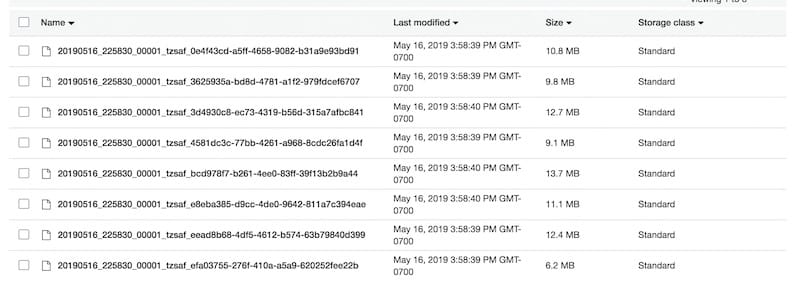

But also in AWS S3:

This is just the tip of the iceberg, the Create Table As command also supports the ORC file format or partitioning the data.

Amazon Athena wasn’t designed to replace Glue or EMR, but if you need to execute a one-off job or plan to query the same data over and over on Athena, then you may want to use this trick.

If you have questions about CloudForecast to help you monitor your AWS cost, or questions about this post, feel free to reach out via email [email protected] or by Twitter: @francoislagier. Also, follow our journey @cloudforecast

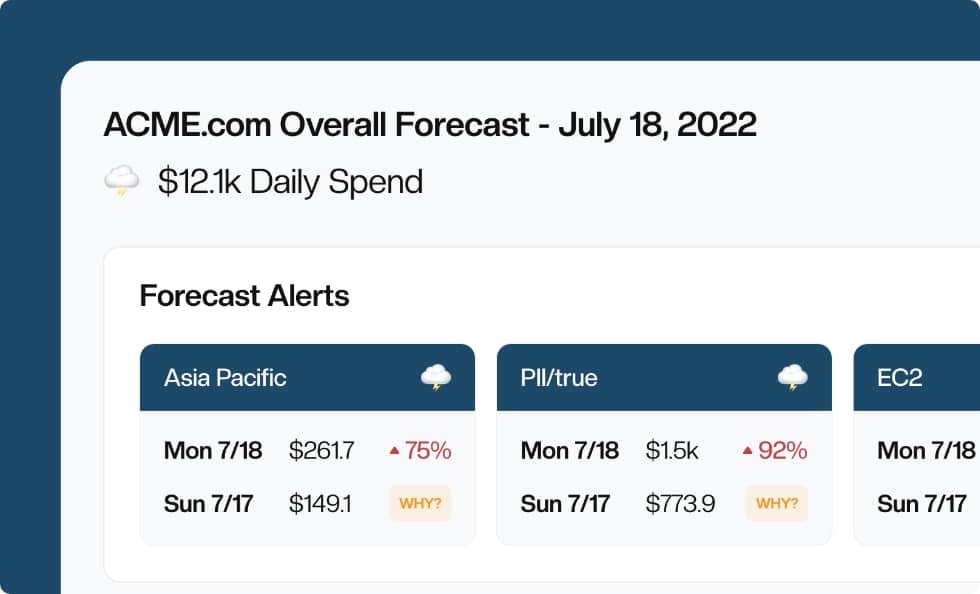

Manage, track, and report your AWS spending in seconds — not hours

CloudForecast’s focused daily AWS cost monitoring reports to help busy engineering teams understand their AWS costs, rapidly respond to any overspends, and promote opportunities to save costs.

Monitor & Manage AWS Cost in Seconds — Not Hours

CloudForecast makes the tedious work of AWS cost monitoring less tedious.

AWS cost management is easy with CloudForecast

We would love to learn more about the problems you are facing around AWS cost. Connect with us directly and we’ll schedule a time to chat!